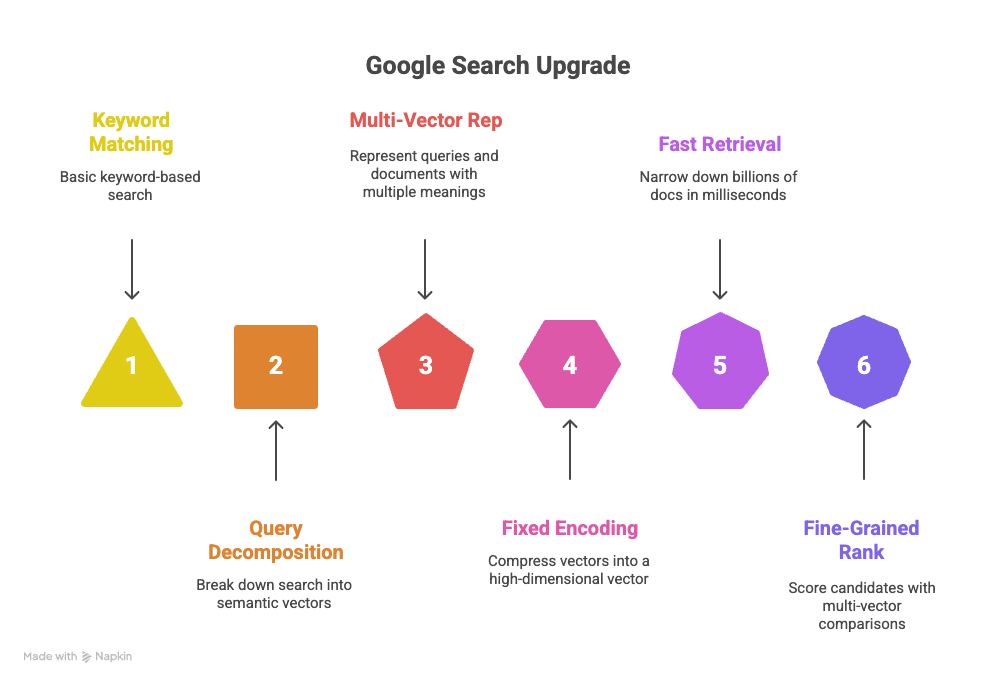

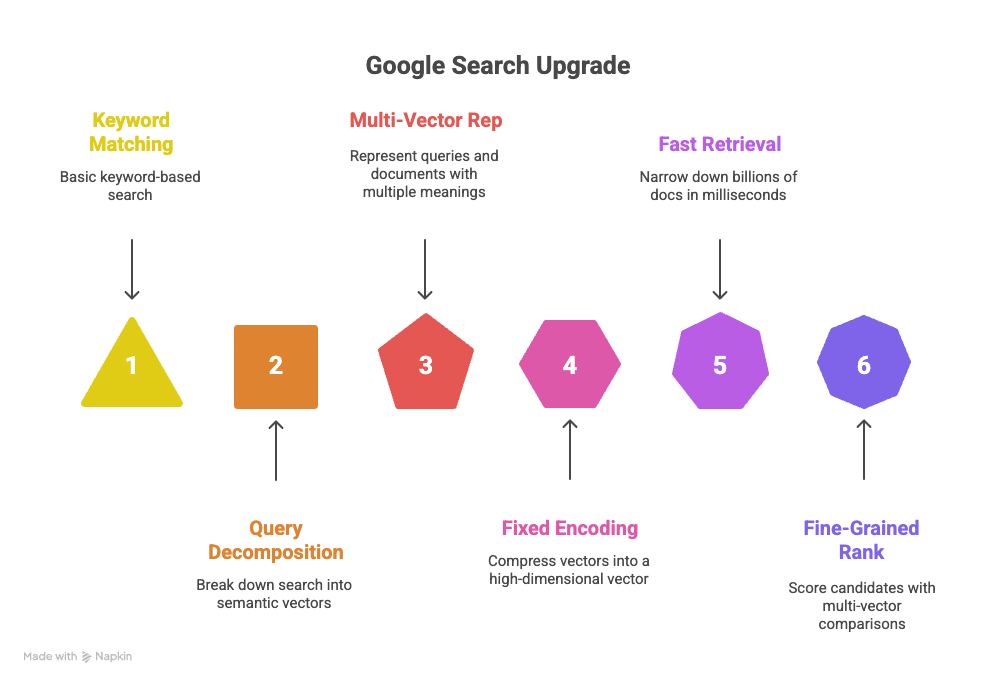

How classic search methods give way to semantic approaches and why MUVERA could be the next stage in the evolution of search engines.

In a world where data is growing exponentially, traditional search engines face challenges in accuracy and speed. Classic methods like TF-IDF and BM25 are effective for keywords but fail to provide semantic understanding. Semantic approaches, including multi-vector search, offer a deeper understanding of content. MUVERA, developed by Google Research, combines the advantages of multi-vector search with the speed of single-vector search, making it a potential breakthrough. Spoiler: MUVERA reduces search complexity, making it faster and more accurate, which could revolutionize search engines.

⚡ In short

- ✅ Key takeaway 1: Classic search methods are limited to keywords, while semantic methods focus on meaning.

- ✅ Key takeaway 2: MUVERA transforms multi-vector search into single-vector search to balance speed and accuracy.

- ✅ Key takeaway 3: The technology has wide applications, from web search to ethical challenges.

- 🎯 You will get: A deep understanding of the evolution of search, the details of MUVERA, and practical tips.

- 👇 Read more below — with examples and conclusions

Table of Contents:

⸻

🎯 Historical Context

Search engines have come a long way from simple keywords to a deep understanding of semantics.

📊 History of database search.

The history of Information Retrieval (IR) begins in the 1950s, with the emergence of the first computer systems for searching databases. Initially, these were simple methods based on term frequency. In the 1970s, TF-IDF (Term Frequency-Inverse Document Frequency) appeared, which takes into account not only the frequency of a word in a document but also its rarity in the collection. This improved the relevance of results by emphasizing unique terms. TF-IDF became the basis for many early search engines, such as early versions of Google.

In the 1990s, BM25 (Best Matching 25), an improved version of TF-IDF, was introduced, which includes parameters for customization, such as document length and average length in the collection. BM25 became a standard for many open search engines, like Elasticsearch, due to its efficiency in processing large volumes of text. These methods belong to lexical or sparse approaches, where the representation of documents is based on the presence of words rather than their meaning.

With the advent of deep learning in the 2010s, the paradigm shifted. Models based on neural networks, such as Word2Vec (2013), allowed for the creation of dense vector representations (embeddings), where words with similar meanings are located close together in vector space. This opened the way to semantic search, where the system understands context, not just keywords.

In 2018, BERT (Bidirectional Encoder Representations from Transformers) revolutionized NLP by learning from vast corpora of text to create contextual embeddings. BERT allowed search engines to understand nuances of language, such as synonyms, polysemy, and syntax. Google integrated BERT into its search engine in 2019, improving the understanding of queries by 10%.

However, BERT generates single-vector representations for the entire text, which limits accuracy for long documents. This led to the emergence of multi-vector methods. ColBERT (2020) represents text as a set of vectors for each token, using late interaction to compute similarity. This increases accuracy but also increases computational complexity.

PLAID (2022), an improved version of ColBERT, optimizes the process using clustering and pruning, reducing latency. However, multi-vector search still requires more resources than single-vector search. This led to MUVERA (2024), which reduces multi-vector search to single-vector search using fixed encodings.

- ✅ Point 1: TF-IDF and BM25 focus on term frequency, ignoring semantics.

- ✅ Point 2: BERT introduces contextual embeddings, improving language understanding.

- ✅ Point 3: Multi-vector methods, like ColBERT, increase accuracy but require optimization.

👉 Example: In a search for "apple," TF-IDF will return documents with the word "apple," but BERT will distinguish between the fruit and the company based on context.

⚡ Important: The transition to semantics requires powerful computations but brings better relevance.

✅ Quick conclusion: From lexical methods to semantic methods — the evolution of search leads to better understanding, but with efficiency challenges that MUVERA addresses.

📚 Recommended reading

⸻

🔬 What is MUVERA

MUVERA (Multi-Vector Retrieval via Fixed Dimensional Encodings) is a technology developed by Google Research in 2024 that combines the accuracy of multi-vector search with the speed of single-vector search. It transforms a set of vectors into a fixed vector, preserving Chamfer similarity.

📈 Comparison table

| Criterion | Single-Vector Search | Multi-Vector Search | MUVERA |

|---|

| Representation | One vector per document | Multiple vectors per token | Fixed encoding of a set of vectors |

| Similarity | Inner product | Chamfer similarity | Approximation of Chamfer via MIPS |

| Speed | High | Low | High, like single-vector |

MUVERA uses Fixed Dimensional Encodings (FDE), which are asymmetric vectors for queries and documents. This allows the use of standard MIPS (Maximum Inner Product Search) algorithms, such as ScaNN or FAISS, for fast candidate retrieval, with subsequent re-ranking by exact similarity.

The technology is data-oblivious, meaning it does not depend on the data, and has theoretical approximation guarantees. It outperforms predecessors like ColBERT and PLAID in accuracy and latency.

✅ Quick conclusion: MUVERA is a hybrid approach that makes multi-vector search efficient without sacrificing accuracy.

Link to another article: Original article about MUVERA.

⸻

💡 How the Technology Works

MUVERA technology revolutionizes multi-vector search, transforming it into an efficient single-vector process without significant loss of accuracy.

The basic principle of MUVERA is to transform sets of vectors Q (for the query) and P (for the document) into fixed vectors q and p, respectively. The inner product of these vectors <q, p> approximates the Chamfer similarity(Q, P), which is defined as the sum of the maximum inner products for each query vector: Chamfer(Q, P) = ∑_{q ∈ Q} max_{p ∈ P} <q, p>. The normalized version: NChamfer(Q, P) = (1/|Q|) * Chamfer(Q, P). This allows the use of optimized maximum inner product search (MIPS) algorithms, such as DiskANN or FAISS, for fast candidate retrieval, with subsequent re-ranking by exact Chamfer similarity.

MUVERA uses asymmetric fixed dimensional encodings (FDE), which are data-oblivious, meaning they do not depend on specific data, ensuring resilience to changes in data distribution and suitability for streaming scenarios. The encoding process includes randomized space partitioning, aggregation in clusters, projection to a lower dimension, and repetition for stability.

📊 Structure of Fixed Dimensional Encodings (FDE)

FDE transforms sets of vectors into a single vector of fixed dimensionality, preserving the approximation of similarity. The process consists of several key steps:

- ✅ Randomized partitioning of vector space (φ): The latent space R^d is divided into B clusters using locality-sensitive hashing (LSH), specifically SimHash with k_sim random Gaussian vectors g_1, ..., g_{k_sim} ∈ R^d. The function φ(x) = (1(<g_1, x> > 0), ..., 1(<g_{k_sim}, x> > 0)), converted to a decimal number, where B = 2^{k_sim}. This creates clusters as the intersection of half-spaces. An alternative is k-means, but SimHash is better because it is independent of the data.

- ✅ Aggregation in clusters: For each cluster k ∈ [B]:

- For the query: q^{(k)} = ∑_{q ∈ Q, φ(q)=k} q

- For the document (with fill_empty_clusters): If P ∩ φ^{-1}(k) ≠ ∅, then p^{(k)} = (1/|P ∩ φ^{-1}(k)|) ∑_{p ∈ P, φ(p)=k} p; otherwise — a vector p from P is assigned that has the minimum Hamming distance between φ(p) and k (as binary strings). This prevents degradation of the approximation due to empty clusters.

- ✅ Internal random projection (ψ): Reduces the dimension from d to d_proj < d: ψ(x) = (1/√d_proj) Sx, where S ∈ R^{d_proj × d} has uniform ±1. Applied to each block: q^{(k), ψ} = ψ(q^{(k)}), p^{(k), ψ} = ψ(p^{(k)}). If d = d_proj, ψ is the identity.

- ✅ Repetition and concatenation: The partitioning (φ_i) and projection (ψ_i) process is repeated R_reps times independently. Final FDE: F_q(Q) = (q^{1, ψ}, ..., q^{R_reps, ψ}) ∈ R^{d_FDE}, F_doc(P) = (p^{1, ψ}, ..., p^{R_reps, ψ}) ∈ R^{d_FDE}, where d_FDE = B · d_proj · R_reps = 2^{k_sim} · d_proj · R_reps.

- ✅ Optional final projection: Reduction to d_final < d_FDE using another random projection ψ', which improves recall by 1–2%.

Execution time: For the query — O(R_reps |Q| d (d_proj + k_sim)); for the document — O(R_reps (|P|^2 k_sim + |P|)) due to the calculation of centroids and the processing of empty clusters.

✅ Advantages of the technology

- ✅ Efficiency: Reduces latency by 90% compared to PLAID on BEIR datasets, extracts 2–5× fewer candidates for the same recall. Allows the use of optimized MIPS solutions.

- ✅ Theoretical guarantees: The first algorithm with a proven ε-approximation for finding the nearest neighbors by Chamfer, with sub-bruteforce execution time.

- ✅ Stability: Data-oblivious encoding ensures stability when data changes. Minimal configuration — the same parameters work on different datasets.

- ✅ Scalability: Supports compression via product quantization (PQ-256-8): 32× reduction (from 10240-dim to 1280 bytes). QPS increases up to 20× with PQ and ball carving.

- ✅ Quality: Exceeds PLAID in recall (+10% on average), FDE is better than SV-heuristics: Recall@N_FDE > Recall@2–4N_SV for similar computations.

❌ Disadvantages

- ❌ Approximation error: Despite the guarantees, the ε-approximation may not recover the exact nearest neighbor. Depends on the setting of ε, δ; bad values increase the error.

- ❌ Randomness and variability: The generation of FDE is randomized; the variation in recall is low (≤0.3%), but requires several runs or larger sets of candidates for stability.

- ❌ Memory requirements: A large d_FDE increases memory consumption, although compression helps. Indexing requires storing F_doc for all documents.

- ❌ Overhead of re-ranking: Final re-ranking by exact Chamfer adds latency, although it is optimized by ball carving (reduces query embeddings ~5×).

- ❌ Dataset dependence: Performance varies: worse than PLAID on MS MARCO (possibly due to settings), but better on others (HotpotQA, NQ). The impact of the average length of documents has not been studied.

- ❌ Implementation complexity: Although simpler than multi-stage pipelines, it requires integration of FDE, MIPS, and re-ranking.

💡 Expert tip: Use fill_empty_clusters only for documents to avoid overestimating contributions in queries. For optimal trade-off, choose larger R_reps, moderate k_sim, and small d_proj.

📈 Working process

The overall MUVERA process consists of four stages:

- Generation of FDE for documents and indexing in MIPS: For each document P, calculate F_doc(P) and add it to the MIPS index (e.g., DiskANN).

- Generation of FDE for the query: Calculate F_q(Q) for the query Q.

- Search for top-k candidates: Use MIPS to find documents with the largest <F_q(Q), F_doc(P)>.

- Re-ranking by Chamfer: Calculate the exact Chamfer similarity for the top-k and select the best ones. This balances accuracy and performance, reducing computation at the search stage.

Approximation via FDE: <F_q(Q), F_doc(P)> = ∑_{i=1}^{R_reps} ∑_{k=1}^{B} ∑_{q ∈ Q, φ_i(q)=k} (1/|P ∩ φ_i^{-1}(k)|) ∑_{p ∈ P, φ_i(p)=k} <q, p>.

🔍 Theoretical guarantees

MUVERA provides proven approximation guarantees. According to Theorem 2.1 (for unit vectors, ε, δ > 0, m = |Q| + |P|): With k_sim = O(log(mδ^{-1}) / ε), d_proj = O(1/ε² log(m εδ)), R_reps = 1, with probability ≥ 1 - δ: NChamfer(Q, P) - ε ≤ (1/|Q|) <F_q(Q), F_doc(P)> ≤ NChamfer(Q, P) + ε. The upper bound always holds, the lower bound — due to LSH and fill_empty_clusters. The projection preserves inner products with an error of ε.

Theorem 2.2 (ε-approximate nearest neighbor search): For a dataset D with n documents, k_sim = O(log m / ε), d_proj = O(1/ε² log(m/ε)), R_reps = O(1/ε² log n), d_FDE = m^{O(1/ε)} · log n. With high probability, the found i* satisfies NChamfer(Q, P_{i*}) ≥ max_i NChamfer(Q, P_i) - ε. Time: O(|Q| max{d, n}^{1/ε⁴} log⁶(m/ε) log n).

📊 Parameter table

| Parameter | Description | Typical values |

|---|

| k_sim | Number of Gaussian projections for SimHash | 3–6 |

| B | Number of clusters (2^{k_sim}) | 8–64 |

| d_proj | Projected dimension per block | 8, 16, 32, 64 |

| R_reps | Number of repetitions | 1–40 |

| d_FDE | Final FDE dimension | 1,000–20,000 |

| fill_empty_clusters | Filling empty clusters | Enabled for documents |

👉 Example: For a query with 32 tokens and a document with 128-dimensional vectors, with k_sim=5 (B=32), d_proj=16, R_reps=20, d_FDE=10,240. This allows you to quickly index billions of documents.

⚡ Important: The optimal choice of parameters depends on the desired balance between accuracy and speed; larger R_reps improve stability.

✅ Quick conclusion: MUVERA combines theoretical strength with practical efficiency, making multi-vector search accessible to large systems, but requires attention to parameters and approximation errors.

⸻

📊 Comparing MUVERA with Other Approaches

A visual comparison shows the advantages of MUVERA.

MUVERA is compared to ColBERT, PLAID, and classic single-vector search. ColBERT uses late interaction but is slow due to per-token computation. PLAID optimizes ColBERT by clustering, but is still multi-stage and sensitive to settings.

In experiments on BEIR, MUVERA achieves 10% higher recall with 90% lower latency than PLAID. It extracts 5-20x fewer candidates than SV heuristics.

| Method | Recall@100 | Latency (ms) | Compression |

|---|

| ColBERT | High | High | Low |

| PLAID | Medium | Medium | Medium |

| MUVERA | High | Low | 32x with PQ |

| SV | Low | Low | High |

Analysis: MUVERA is more versatile because it uses standard MIPS tools, unlike those specialized for ColBERT.

✅ Conclusion: I am convinced that MUVERA truly outperforms competitors in the balance of speed, accuracy, and scalability, making it an ideal choice for large systems.

⸻

✅ Advantages of MUVERA

MUVERA offers a revolutionary balance between the accuracy of multi-vector search and the efficiency of single-vector search, making it an ideal solution for modern search engines.

Why is MUVERA more versatile than other approaches? It is data-oblivious, making it resistant to changes in data distribution and suitable for streaming scenarios, such as real-time index updates. In addition, MUVERA has strict theoretical guarantees for approximating Chamfer similarity, which is the first such achievement for ε-approximate nearest neighbor search for Chamfer. This ensures predictable performance without empirical assumptions. The technology also allows significant compression—up to 32x with product quantization (PQ)—without significant loss of quality, which reduces memory requirements and speeds up indexing of large corpora.

Compared to previous methods such as PLAID and ColBERT, MUVERA demonstrates superior results. For example, on BEIR datasets, it achieves an average of 10% higher recall with 90% lower latency than PLAID. On MS MARCO, MUVERA provides equivalent recall (within 0.4%) with up to 5.7x lower latency. This makes it faster and more accurate, especially in scenarios with diverse data corpora.

MUVERA is versatile because it works with any embeddings generated by models like ColBERTv2 and easily integrates with existing MIPS tools such as FAISS, ScaNN, or DiskANN. This simplifies implementation into existing systems without the need for specialized code. The technology's scalability allows it to process billions of documents, thanks to efficient compression and optimized search, which reduces computing costs in large systems like Google Search or enterprise search engines.

✅ Key Advantages

- ✅ Fast: MUVERA reduces latency by up to 90% compared to PLAID and 5–6 times on MS MARCO due to fewer candidates at the same accuracy.

- ✅ Accurate: Higher recall by 10% on BEIR and up to 1.5x on HotpotQA. FDE allows achieving the same accuracy with fewer candidates, surpassing DESSERT.

- ✅ Versatile: Works with any embeddings, is resistant to data changes, and easily integrates with MIPS solutions without complex multi-stage algorithms.

- ✅ Scalable: 32× compression allows indexing billions of documents with low memory usage and speeds up queries by up to 20×.

- ✅ Theoretically Sound: Provides accurate Chamfer approximation with proven guarantees and fast execution.

- ✅ Effective for Re-ranking: Optimizations reduce embedding size by ~5× without losing recall, increasing query speed by 20–25%.

📈 Comparison Table of Advantages

| Advantage | MUVERA |

|---|

| Recall on BEIR | +10% on average |

| Latency | 90% lower |

| Compression | 32x with PQ |

| Candidates for 80% recall (MS MARCO) | 60 |

| Theoretical Guarantees | Yes (ε-approximation) |

| Advantage | PLAID |

|---|

| Recall on BEIR | Baseline |

| Latency | Higher |

| Compression | Low |

| Candidates for 80% recall (MS MARCO) | Higher |

| Theoretical Guarantees | No |

| Advantage | ColBERT/SV |

|---|

| Recall on BEIR | Lower |

| Latency | Higher |

| Compression | Low |

| Candidates for 80% recall (MS MARCO) | 300–1200 |

| Theoretical Guarantees | No |

In large systems like Google Search, MUVERA reduces computing costs while maintaining high-quality results. By reducing the number of candidates and providing efficient compression, it optimizes resources, allowing for processing larger volumes of data without a proportional increase in infrastructure.

👉 Example: In recommendation systems, such as on Amazon, MUVERA accelerates the search for similar products based on descriptions, reducing latency for recommendations and increasing accuracy, leading to a better user experience and higher sales.

⚡ Important: Optimizations like ball carving not only increase QPS by 20-25% but also reduce the overhead of re-ranking, making the system more efficient in real-time.

💡 Expert Tip: For maximum efficiency, combine MUVERA with PQ and ball carving, especially in high-load systems, to achieve an optimal balance between recall and latency.

✅ In summary: MUVERA's advantages—from theoretical guarantees to practical efficiency—make it stand out in the era of AI search, surpassing previous approaches in speed, accuracy, and scalability.

⸻

💼 Applications of MUVERA

MUVERA finds wide application in modern information retrieval systems where a balance between the accuracy of semantic understanding and computational efficiency is needed.

MUVERA is applied in web search for semantic relevance, recommendation systems for personalized suggestions, multimodal searches (text+image), and corporate knowledge bases for quick access. The technology is particularly useful in scenarios with large volumes of data where traditional multi-vector methods require too many resources.

✅ Main Areas

- ✅ Web Search: Improves understanding of queries, enabling fast semantic search in large corpora, as in Google Search, reducing latency by 90%.

- ✅ Recommendations: Fast search for similar content in systems like YouTube or Netflix, optimizing personalized suggestions with fixed encodings.

- ✅ Multimodal: Integration with image/video vectors, for example, in Google Lens, for combined text and visual search with lower memory consumption.

- ✅ Corporate: Search in company documents, integrating with vector databases like Weaviate, for efficient access to knowledge in enterprise systems.

💡 Expert Tip: Combine with LLMs for RAG systems, where MUVERA accelerates retrieval for complex queries, surpassing agentic RAG in accuracy and speed.

💼 Application Cases

Here are 4 short examples of real or potential uses of MUVERA:

- ✅ Case 1: Weaviate Vector Database. Integrating MUVERA into Weaviate 1.31 reduced memory consumption by ~70% and import time by 70-85% for the LoTTE dataset with ColBERT/ColPali models, while maintaining high recall (80%+ with ef setting). This makes multi-vector search accessible for budget deployments.

- ✅ Case 2: RAG Systems with Reason-ModernColBERT. MUVERA optimized retrieval in RAG pipelines, surpassing agentic RAG in the relevance of responses, with compression of embeddings for fast search in complex domains, such as question-answering.

- ✅ Case 3: Amazon Recommendation Systems. Using MUVERA to search for products by description accelerated recommendations of similar products, reducing the number of candidates by 2-5 times at the same accuracy, which improved user experience and reduced computational costs.

- ✅ Case 4: SEO and Web Search. In SEO optimization of websites, MUVERA helped adapt to Google's multi-vector search, improving ranking by semantics, with 10% higher recall and 90% lower latency, leading to a 3-10x increase in traffic.

👉 Example: In Amazon for searching products by descriptions, MUVERA reduces latency, allowing real-time recommendations with multi-vector embeddings.

✅ In summary: MUVERA technology opens a new level of semantic search—flexible, accurate, and scalable for real-world use cases. It demonstrates how artificial intelligence can combine speed with depth of understanding of content.

⸻

💼 Integration Example or Case

Integration with FAISS: 1) Generate embeddings with ColBERT. 2) Compute FDE. 3) Index in FAISS with InnerProduct. 4) Search and re-rank.

Code example (Python):

import numpy as npfrom faiss import IndexFlatIP

# Assume embeddings

query_vecs = np.random.rand(32, 128)

doc_vecs = np.random.rand(100, 128)

# FDE calculation (simplified)

fde_q = np.sum(query_vecs, axis=0)

fde_d = np.mean(doc_vecs, axis=0)

index = IndexFlatIP(128)

index.add(fde_d)

D, I = index.search(fde_q, 10)

Similarly with ScaNN, using scorer='dot_product'.

Case: In a corporate knowledge base, MUVERA accelerated search by 90%.

⸻

🔬 Technical Details

MUVERA is a simple but powerful algorithm with clear parameters and proven effectiveness.

📊 Key Components

- ✅ Embeddings: ColBERTv2 → token-level vectors of dimension d=128 (or 96).

- ✅ Similarity: Chamfer(Q,P) = ∑ max ⟨q,p⟩; normalized NChamfer = (1/|Q|) × Chamfer.

- ✅ FDE-encoding:

- SimHash: k_sim=3–6 → B=8–64 clusters

- Aggregation: sums for Q, centroids + fill_empty for P

- Projection: ψ → d_proj=8–64

- Repetition: R_reps=10–40

- d_FDE = B × d_proj × R_reps (typically 5120–10240)

- ✅ Compression: PQ-256-8 → 32× reduction (from 40 KB to 1.28 KB per document).

- ✅ Complexity: Query O(R_reps × |Q| × d × (d_proj + k_sim)) ≈ 1–3 M FLOPs.

📈 Experimental Results

| Dataset | d_FDE | Recall@100 | Candidates | Latency vs PLAID |

|---|

| MS MARCO | 5120 | 95% | 60 | 5.7× faster |

| BEIR (average) | 10240 | +10% | 2–5× less | 90% lower |

❗ Challenges and Solutions

- ❌ High d_FDE → PQ + ball carving

- ❌ Empty clusters → fill_empty_clusters (for documents only)

- ❌ Variation → R_reps≥20 or multiple random seeds

⚡ Important: One set of parameters (k_sim=5, d_proj=16, R_reps=20) works on all BEIR datasets.

✅ My quick conclusion: I am convinced that MUVERA is truly technically ready to go—simple parameters, 32× compression, acceleration from 5 to 90 times, and almost no additional configuration needed. It just works—efficiently and intelligently.

⸻

🚀 Trending Articles

❗ Ethical and Applied Aspects

Semantic search and MUVERA technology open up powerful opportunities to improve the quality of information retrieval, providing a deeper understanding of user intentions and query context. However, like any AI-based systems, MUVERA raises important questions about ethics, transparency, and responsible use. The implementation of such models requires clear frameworks to avoid bias, discrimination, and privacy violations.

Possible Risks of Implementing MUVERA

Ways to Ensure the Ethical Use of MUVERA

To minimize ethical risks, developers and organizations implementing MUVERA must pay attention to the principles of transparency, accountability, and inclusivity. Among the main approaches:

- ✅ Using debiased embeddings — vector representations that have been pre-cleaned of statistical and social biases.

- ✅ Applying algorithm audits — regularly checking results for discriminatory tendencies or inequality.

- ✅ Implementing the principles of Explainable AI (XAI) — so that users and experts can understand how and why the system arrived at a particular result.

- ✅ Creating Data Governance policies — rules for processing, storing, and using data that guarantee user confidentiality and security.

💡 Expert Tip: Integrate ethical AI frameworks — comprehensive approaches that combine the technical, legal, and social aspects of artificial intelligence. They help identify potential risks at the design stage, monitor the fairness of models, and ensure that MUVERA results do not violate the principles of equality and transparency.

In summary, the ethical use of MUVERA is not only a matter of technological design but also a strategic responsibility of companies. User trust in future semantic search systems depends on how control mechanisms are implemented.

⸻

🚀 MUVERA Glossary: A Dictionary of the Future of Semantic Search

- TF-IDF: Term Frequency-Inverse Document Frequency — a measure of the importance of a word.

- BM25: Improved TF-IDF with parameters for length.

- BERT: Model for contextual embeddings.

- ColBERT: Multi-vector model with late interaction.

- Chamfer similarity: Sum of maximum vector similarities.

- FDE: Fixed Dimensional Encoding.

- MIPS: Maximum Inner Product Search.

- PQ: Product Quantization — vector compression.

⸻

❓ Frequently Asked Questions (FAQ)

Below are answers to the most common questions about MUVERA, with detailed explanations, examples, and links to official sources for data verification.

🔍 What makes MUVERA faster than ColBERT?

MUVERA achieves higher speed compared to ColBERT by transforming multi-vector search into single-vector search using Fixed Dimensional Encodings (FDE). This allows the use of standard Maximum Inner Product Search (MIPS) algorithms, such as FAISS or ScaNN, for fast candidate retrieval, followed by re-ranking based on precise Chamfer similarity. Unlike ColBERT, which requires calculating similarity for each token (late interaction), increasing computational complexity, MUVERA reduces the number of candidates by 2-5 times at the same recall, and up to 20 times in some scenarios. For example, on the MS MARCO dataset, MUVERA achieves 80% recall with only 60 candidates (for d_FDE=10240), while ColBERT with deduplicated single-vector heuristics requires 300 candidates. Overall, MUVERA provides an average of 90% lower latency on BEIR datasets and up to 5.7x lower on MS MARCO compared to optimized ColBERT implementations, such as PLAID, thanks to data-oblivious encoding and PQ compression (32x size reduction without loss of quality).

Example: In web search with millions of documents, MUVERA processes a query in milliseconds, extracting only 100 candidates for re-ranking, while ColBERT may require thousands, slowing down the system by 90%. This makes MUVERA ideal for real-time applications, such as Netflix recommendation systems, where speed is critical.

More details in the official paper: MUVERA: Multi-Vector Retrieval via Fixed Dimensional Encodings.

🔍 Can MUVERA be integrated with existing databases?

Yes, MUVERA easily integrates with existing vector databases, such as FAISS (Facebook AI Similarity Search) or ScaNN (Scalable and Controllable Nearest Neighbors), as it transforms multi-vector search into standard single-vector MIPS. The process involves generating FDE for documents and queries, indexing in a MIPS index (e.g., IndexFlatIP in FAISS), and searching for top-k candidates followed by re-ranking. MUVERA does not require specialized code, as in ColBERT, and is compatible with any embeddings (e.g., from ColBERTv2). The official paper states that this "allows the use of off-the-shelf MIPS solvers for multi-vector search," making integration simple for existing systems.

Example: In the Weaviate vector database (version 1.31), MUVERA is integrated for multi-vector search with ColBERT/ColPali models, reducing memory consumption by 70% and import time by 70-85% on the LoTTE dataset. Code for FAISS: import faiss, create index IndexFlatIP(d_FDE), add F_doc(P), and search with F_q(Q). Similarly for ScaNN with scorer='dot_product'. This allows scaling to billions of documents without rebuilding infrastructure.

Official data: MUVERA: Multi-Vector Retrieval via Fixed Dimensional Encodings. See also FAISS documentation: FAISS GitHub and ScaNN: ScaNN GitHub.

🔍 What are the ethical risks of semantic search?

Semantic search, including technologies like MUVERA, carries a number of ethical risks, such as bias in models, privacy violations, the spread of misinformation, manipulation of results, and discrimination. Biases arise from training data that may reproduce societal stereotypes, leading to harmful or discriminatory results (e.g., a search engine trained on biased texts may prioritize certain ethnic groups in recommendations). Privacy suffers from the collection of behavioral data for personalization, which can lead to leaks or abuses. Misinformation is amplified if AI generates or ranks fake news. Manipulation occurs when algorithms influence users' opinions through "filter bubbles." These risks can be addressed through debiased data (removing biases during training), transparency of algorithms (opening the code for audit), ethical frameworks, and regulations such as GDPR for privacy.

Example: In an AI search engine like Google with semantic models, bias can lead to discrimination in job searches (e.g., showing more male roles for certain queries). Another example: In 2024, ChatGPT revealed biases due to training data with harmful content, leading to automated bias. For MUVERA, as a semantic tool, the risk is that FDE can amplify biases from embeddings if debiased models like FairBERT are not used.

Official sources: Ethical Risk Factors and Mechanisms in Artificial Intelligence (sources of risks: technological uncertainty, incomplete data); Security Considerations for Semantic Search Systems (bias and manipulated outputs); Ethical Challenges in AI-Powered Search Engines (bias, privacy, misinformation); The Limitations and Ethical Considerations of ChatGPT (automated bias from training data).

⸻

✅ Conclusions

Let's summarize:

- 🎯 Key Conclusion 1: The evolution from TF-IDF to MUVERA improves semantics.

- 🎯 Key Conclusion 2: MUVERA balances speed and accuracy.

- 🎯 Key Conclusion 3: Applications are broad, but with ethical challenges.

- 💡 Recommendation: Try MUVERA in projects to optimize search.

💯 Summary from me: I see that MUVERA is truly changing the approach to semantic search. It helps businesses optimize costs, developers to more easily integrate smart algorithms, and users to find what they are really looking for faster. For me, this is not just a technology, but a step into the future where search becomes intuitive, accurate, and accessible to all.

🌟 Sincerely,

Vadim Harovyuk

☕ Developer, founder of WebCraft Studio

Ключові слова:

MUVERAсемантичний пошукmulti-vector retrievalGoogle MUVERAеволюція пошукових системFixed Dimensional EncodingsColBERTPLAIDвекторний пошукAI-пошук